![MLOps Maturity Model [M3] Hero Image](https://datatron.com/wp-content/uploads/M3HeroImage.png)

MLOps Maturity Model (M3) – What’s Your Maturity in MLOps?

Every journey of 1,000 miles begins with a single step, and your AI/ML journey toward gaining business value from models in production is no different. While every business is unique, with varying resources, teams, tech stacks, and priorities, we’ve found that they can be clustered into five distinct stages, which we’ve defined as Exploration, Prototyping, Realization, Institutionalization, and Mature. This article discusses each stage by examining, in broad strokes, the measures of Ideation, Team, Stack, Process, and Outcome. For a more detailed view, you can download our infographic below.

It’s important to note again that each company’s journey is unique and may not exactly follow the beaten path. This article is your map: use it to determine where you are in this journey, to assess your organization’s willingness to adapt so that it can reach the next stage sooner, and to shine a light on practices that are hindering or could help your evolution. Happy ML’ing!

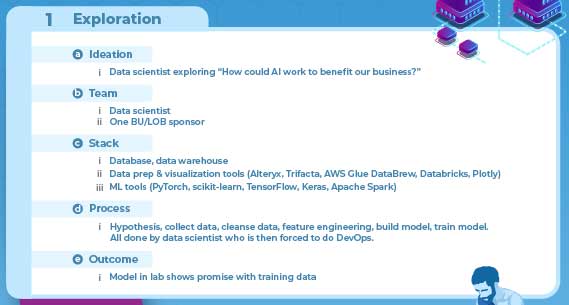

Stage 1: Exploration

Most companies start here. Someone asks, “Can data solve this problem?” If the company doesn’t already have a data scientist, one is hired. They bring their own stack, craft and execute their experiments, and build the model. Because there isn’t a DevOps peer, they are also in charge of deploying the model. At this point, the model shows promise in the lab, and everyone involved is looking forward to the deployment.

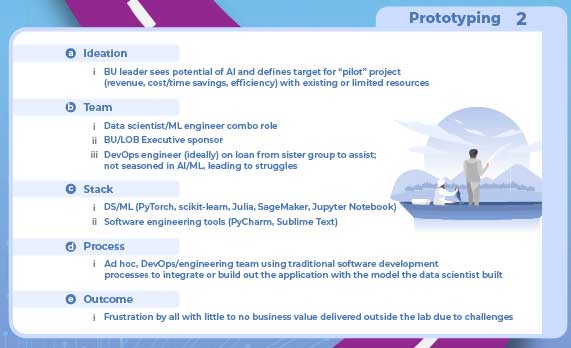

Stage 2: Prototyping

Now you’ve reached the second Stage. With the promising model in hand, the department lead decides to go a step further and set up a pilot project with KPIs. The data scientist isn’t skilled in DevOps, so a DevOps engineer is borrowed (or hired) to assist with the project. The stack now includes traditional DevOps tools to complement the data science stack. Because the program is new, and perhaps because the DevOps engineer is unfamiliar with deploying AI/ML models, the process is piecemeal and filled with haphazard stopgap solutions. The model shows little to no promise outside of the lab, which leads to team-wide, department-wide, and sometimes company-wide frustration.

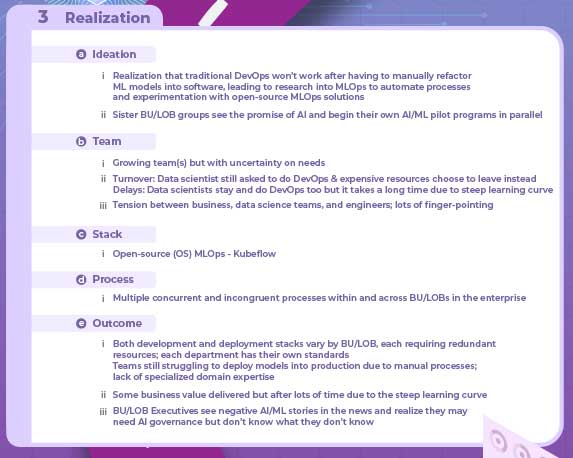

Stage 3: Realization

It’s a bit of a climb to get to this Stage. A detailed postmortem in the department shows that traditional DevOps is ill-suited for model deployment because ML models do not fit neatly into software workflows. Alternatives to DevOps, such as MLOps, are explored. Several other departments see the potential of AI/ML and begin their own pilot projects in tandem. The data scientist requests a new team member who is experienced in MLOps; the company turns the request down because open-source solutions are available and it doesn’t want to pay for a new position before an ROI is established. The traditional DevOps stack has been replaced with an open-source package for deployment, but each department uses a different stack. The process is murky at best, in no small part because the data scientist is unfamiliar with MLOps and it has a steep learning curve. The myriad AI/ML programs are operating concurrently but incongruently. The executives overseeing this are confused because the departments need redundant positions and are still “making it up as they go.” The teams are still having issues deploying their models due to a lack of specialized deployment expertise. There is little to no ROI and the frustrations are unabated. Every person feels like it’s someone else’s fault that the deployment hasn’t happened yet.

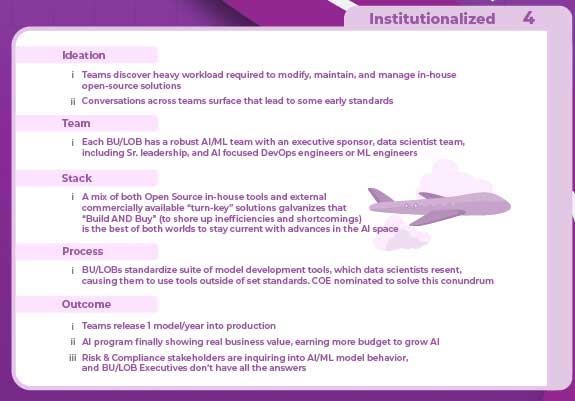

Stage 4: Institutionalization

Nearby is the fourth Stage. The steps needed to resolve the frustrations are becoming clearer thanks to increased communication between departments. One point that becomes crystal clear is that the amount of work required to deploy, manage, and maintain a single model is much greater than anticipated. A second important point is the need for consistent processes and standards for model development and deployment. Each business unit (BU) or line of business (LOB) now has a dedicated team, complete with senior leadership positions, executive sponsors, and MLOps engineers (or ML engineers) to complement the data scientists. Scaling ML requires scaling headcount to support it. The stack is mostly the same, but some of the load has been shifted to commercially available AI/ML solutions to maximize the ROI for each department. Standards are adopted to universalize the process, which causes some consternation among team members, as many of them have preferred stacks. A few of them even break protocol because they know that a different stack is significantly more efficient for their project. The Center of Excellence (COE) is brought in to solve this issue and create parity across the organization. As a result of all of this hard work, the teams are releasing one model per year and generating enough ROI to increase the budget even more. These increases draw some questions from stakeholders about governance, risk, and compliance (GRC), and the department leads don’t have answers to every question.

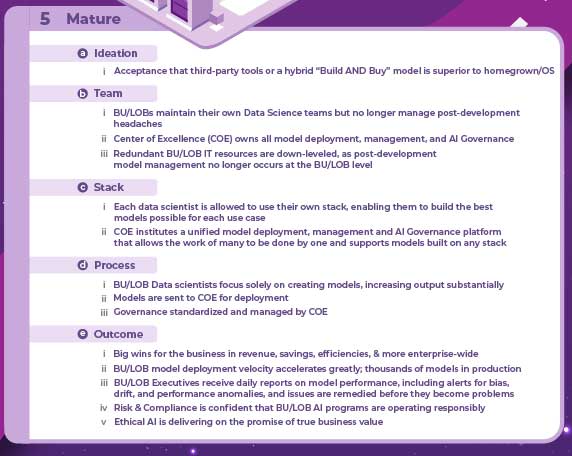

Stage 5: Mature

At last, you’ve reached the final Stage. The consensus is that a “Build And Buy” approach is better than completely outsourcing or running every process in-house. The departments still have their own teams for development. The COE is responsible for AI governance and post-development issues. Due to the COE’s new role, some of the redundant resources employed by the various departments are transferred or down-leveled. The COE oversees a unified model deployment platform that works irrespective of language, stack, or framework, allowing each team to use the best tools for each use case. The platform also catalogs all versions of the models for AI governance and monitors them for potential issues. Each department is now solely focused on development and increases the number of models in production per year by a significant margin. This leads to a proportionate increase in ROI. Reports come in daily regarding model performance, behavior, and equity. The previously unanswered questions about GRC now have satisfactory answers. Ethical AI has finally delivered and is solving the company’s problems.
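To make the cataloging idea concrete, here is a minimal sketch of what “cataloging all versions of the models” could look like. It is an illustration only, not a description of any particular platform: the names `ModelVersion` and `ModelCatalog`, and the fields they track, are assumptions made up for this example, and a real platform would persist this data and attach monitoring to it.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ModelVersion:
    """One immutable catalog entry (hypothetical fields for illustration)."""
    name: str
    version: int
    framework: str   # free-form label; the catalog does not care which one
    owner: str       # department that developed the model
    registered_at: datetime


class ModelCatalog:
    """In-memory catalog that keeps every version of every model."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ModelVersion]] = {}

    def register(self, name: str, framework: str, owner: str) -> ModelVersion:
        # Version numbers start at 1 and increase per model name.
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(
            name=name,
            version=len(history) + 1,
            framework=framework,
            owner=owner,
            registered_at=datetime.now(timezone.utc),
        )
        history.append(entry)
        return entry

    def latest(self, name: str) -> ModelVersion:
        # Raises KeyError if the model was never registered.
        return self._versions[name][-1]

    def history(self, name: str) -> list[ModelVersion]:
        # Return a copy so callers cannot alter the audit trail.
        return list(self._versions.get(name, []))


catalog = ModelCatalog()
catalog.register("churn", framework="sklearn", owner="marketing")
catalog.register("churn", framework="pytorch", owner="marketing")
print(catalog.latest("churn").version)  # prints 2
```

Because older entries are never overwritten, the history for a model can still answer who owned it and which framework it used, even after the person who built it has moved on.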

Having made it to the final stage, you’ll find the path from here easier to tread and clearer to see. Your AI/ML ecosystem is now building models, training them, deploying them, profiting from them, governing them, and retiring them when necessary. Congratulations on making it this far.

Keep in mind that a journey can require long periods of rest. It could be that a particularly obstinate department head is sure that DevOps will work, and your journey therefore stays in the Prototyping Stage longer than you’d like. A top-tier data scientist who has been involved since the Exploration Stage might be poached, and the resulting brain drain could hamper your journey greatly (as an aside, this is another reason that proper model cataloging is important). Setbacks are almost certainly going to happen; the only thing you can do is keep moving forward. Our infographic can help remind you which direction is forward.

In Conclusion

So where do you see yourself on this map? Are you in the Exploration Stage and want to avoid most of the headaches listed here? Do you find yourself in the Realization Stage, unsure what the next step should be? Have you reached the Institutionalization Stage and are having trouble figuring out which parts of the process to build and which to buy? Let us know! We’d love to hear from you and show how our services can help make your journey easier.
