A Beginner-Friendly Explanation of How Neural Networks Work

What is a Neural Network?

A few weeks ago, when I started to learn about neural networks, I found that good introductory material for such a complex topic was hard to find. I frequently read that neural networks are algorithms that mimic the brain or have a brain-like structure, which didn’t really help me at all. Therefore, this article aims to teach the fundamentals of neural networks in a manner that is digestible for anyone, especially those who are new to machine learning. Before understanding what neural networks are, we need to take a few steps back and understand what artificial intelligence and machine learning are.

Artificial Intelligence and Machine Learning

Again, it’s frustrating because when you Google what artificial intelligence means, you get definitions like “it is the simulation of human intelligence by machines”, which, although they may be true, can be quite misleading for new learners.

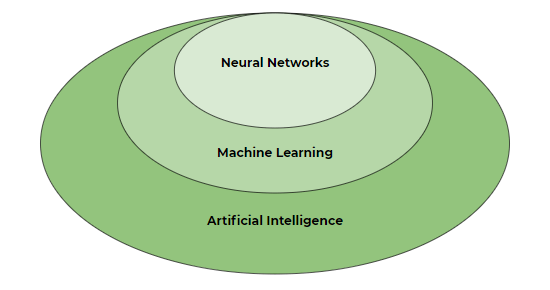

In the simplest sense, artificial intelligence (AI) refers to the idea of giving machines or software the ability to make their own decisions based on predefined rules or pattern recognition models. The idea of pattern recognition models leads to machine learning, which covers algorithms that build models from sample data in order to make predictions on new data. Notice that machine learning is a subset of artificial intelligence.
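To make "build a model from sample data, then predict on new data" a bit more concrete, here's a minimal sketch in Python. It fits a straight line with ordinary least squares, which is the simplest form of linear regression. The function name `fit_line` and the hours/scores numbers are made up purely for illustration and don't come from any real dataset.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept to sample data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up sample data: hours studied vs. exam score (illustrative only).
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

# "Learning": estimate the model's parameters from the sample data.
slope, intercept = fit_line(hours, scores)

# "Predicting": apply the fitted model to an input it has never seen.
predicted = slope * 5.0 + intercept
```

With this toy data the fitted line has a slope of 2 and an intercept of 50, so the prediction for five hours is 60. Real models do the same two steps, just with far more data and parameters.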

There are a number of machine learning models, like linear regression, support vector machines, random forests, and of course, neural networks. This now leads us back to our original question: what are neural networks?

Neural Networks

At its root, a neural network is essentially a network of mathematical equations. It takes one or more input variables and, by passing them through a network of equations, produces one or more output variables. You can also say that a neural network takes in a vector of inputs and returns a vector of outputs, but I won’t get into matrices in this article.

The Mechanics of a Basic Neural Network

Again, I don’t want to get too deep into the mechanics, but it’s worthwhile to show you what the structure of a basic neural network looks like.

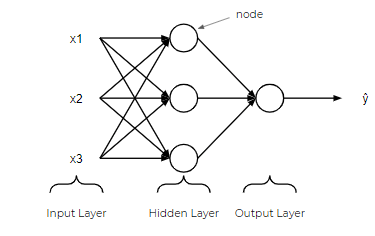

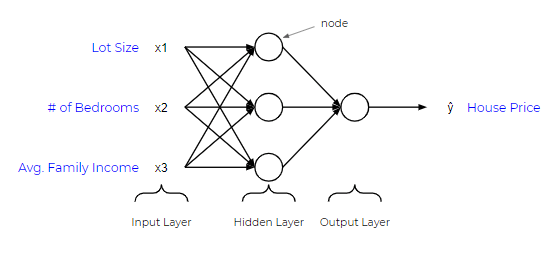

In a neural network, there’s an input layer, one or more hidden layers, and an output layer. The input layer consists of one or more feature variables (also called input variables or independent variables) denoted as x1, x2, …, xn. Each hidden layer consists of one or more hidden nodes or hidden units. A node is simply one of the circles in the diagram above. Similarly, the output layer consists of one or more output units.

A given layer can have many nodes, as in the image above.

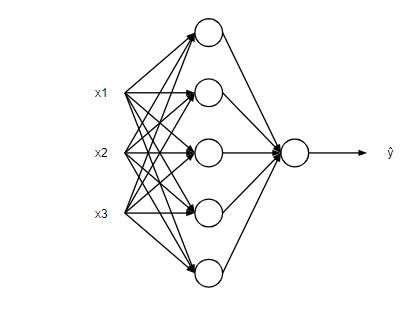

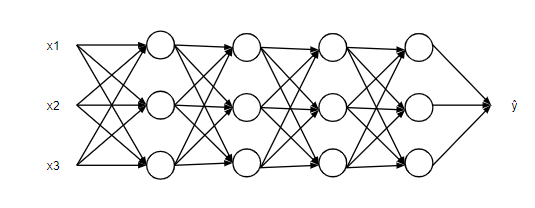

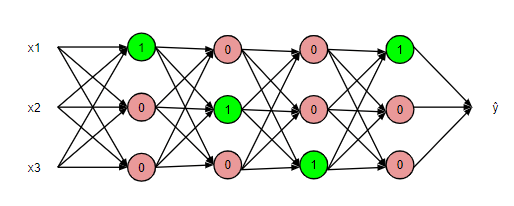

Likewise, a given neural network can have many layers. Generally, more nodes and more layers allow the neural network to make much more complex calculations.

Above is an example of a potential neural network. It has three input variables, Lot Size, # of Bedrooms, and Avg. Family Income. By feeding this neural network these three pieces of information, it will return an output, House Price. So how exactly does it do this?

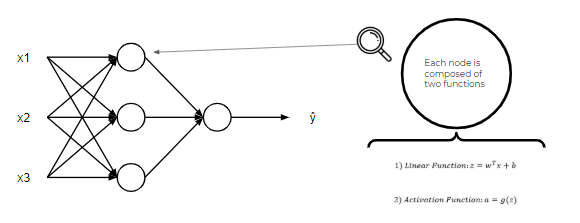

As I said at the beginning of the article, a neural network is nothing more than a network of equations. Each node in a neural network is composed of two functions: a linear function and an activation function. This is where things can get a little confusing, but for now, think of the linear function as some line of best fit. Also, think of the activation function like a light switch, which (for common choices such as the sigmoid function) results in a number between 0 and 1.

What happens is that the input features (x) are fed into the linear function of each node, resulting in a value, z. Then, the value z is fed into the activation function, which determines how far the light switch turns on (a value between 0 and 1).
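Here's a minimal sketch of a single node in Python, assuming the sigmoid function as the activation (one common choice among several). The names `node_output`, `weights`, and `bias`, as well as the numbers, are my own picks for illustration; a real network would learn its weights and bias from data.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def node_output(inputs, weights, bias):
    """One node: a linear function followed by an activation function."""
    # Linear function: z = w1*x1 + w2*x2 + ... + wn*xn + b
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Activation function: turn z into a value between 0 and 1
    return z, sigmoid(z)


# Hypothetical inputs, weights, and bias chosen only for illustration.
x = [1.0, 2.0, 3.0]
w = [0.5, -0.25, 0.0]
b = 0.0

z, a = node_output(x, w, b)
```

With these numbers z works out to 0, and the sigmoid of 0 is 0.5, so this particular light switch sits exactly halfway between off and on.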

Thus, each node ultimately determines which nodes in the following layer get activated, until the network reaches an output. Conceptually, that is the essence of a neural network.
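To see how values flow from layer to layer, here's a rough sketch of a tiny network for the house price example: three inputs, one hidden layer with two nodes, and one output node. Everything specific here is an assumption on my part: the feature values are pretend, already-scaled numbers, the weights and biases are placeholders rather than learned values, and I've left the output node without an activation (`identity`) since a price isn't limited to the range 0 to 1.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    """No activation: pass the linear result straight through."""
    return z


def layer(inputs, weights, biases, activation):
    """Compute every node in one layer from the previous layer's outputs."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        # Linear function for this node
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        # Activation function for this node
        outputs.append(activation(z))
    return outputs


# Hypothetical, already-scaled features: lot size, bedrooms, family income.
features = [0.5, 0.3, 0.8]

# Placeholder weights and biases; a real network would learn these.
hidden_weights = [[0.2, -0.4, 0.1], [0.7, 0.1, -0.3]]
hidden_biases = [0.0, 0.1]
output_weights = [[1.5, -2.0]]
output_biases = [0.5]

# Each layer's outputs become the next layer's inputs.
hidden = layer(features, hidden_weights, hidden_biases, sigmoid)
prediction = layer(hidden, output_weights, output_biases, identity)
```

The key thing to notice is that `layer` is called twice and the result of the first call is the input to the second. Adding more hidden layers just means more calls chained the same way.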

If you want to learn about the different types of activation functions, how a neural network determines the parameters of the linear functions, and how it behaves like a ‘machine learning’ model that self-learns, there are full courses specifically on neural networks that you can find online!

Types of Neural Networks

Neural networks have advanced so much that there are now several types, but below are the three main ones that you’ll probably hear about often.

Artificial Neural Networks (ANN)

Artificial neural networks, or ANNs, are like the neural networks in the images above: they are composed of a collection of connected nodes that take an input or a set of inputs and return an output. This is the most fundamental type of neural network, and probably the first one you’ll learn about if you ever take a course. ANNs involve everything we talked about as well as propagation functions, learning rates, cost functions, and backpropagation.
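To give a feel for how a cost function and a learning rate fit together, here's a small sketch that trains a single sigmoid node with gradient descent. It's a simplification under my own assumptions: the toy data is made up, the cost is mean squared error, and the gradient is worked out with the chain rule for one node only. Backpropagation in a multi-layer network applies that same chain rule layer by layer, which this sketch doesn't show.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def cost(w, b, data):
    """Cost function: mean squared error between predictions and targets."""
    return sum((sigmoid(w * x + b) - y) ** 2 for x, y in data) / len(data)


def train_step(w, b, data, learning_rate):
    """One gradient-descent update for a single sigmoid node."""
    grad_w = 0.0
    grad_b = 0.0
    for x, y in data:
        a = sigmoid(w * x + b)
        # Chain rule: d(error^2)/dz = 2 * (a - y) * a * (1 - a)
        dz = 2.0 * (a - y) * a * (1.0 - a)
        grad_w += dz * x
        grad_b += dz
    n = len(data)
    # The learning rate controls how big a step we take downhill.
    return w - learning_rate * grad_w / n, b - learning_rate * grad_b / n


# Toy data (made up): negative inputs should map to 0, positive ones to 1.
data = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]

w, b = 0.0, 0.0
initial_cost = cost(w, b, data)
for _ in range(200):
    w, b = train_step(w, b, data, learning_rate=0.5)
final_cost = cost(w, b, data)
```

The node starts out guessing 0.5 for everything, and each step nudges the weight in the direction that lowers the cost, so `final_cost` ends up smaller than `initial_cost`.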

Convolutional Neural Networks (CNN)

A convolutional neural network (CNN) is a type of neural network that uses a mathematical operation called convolution. Wikipedia defines convolution as a mathematical operation on two functions that produces a third function expressing how the shape of one is modified by the other. In practice, this means CNNs use convolution instead of general matrix multiplication in at least one of their layers.
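If that definition feels abstract, here's a minimal sketch of a one-dimensional discrete convolution in plain Python. The function name `convolve_1d` and the example numbers are my own. Keep in mind that CNNs for images use a two-dimensional version with learned kernels, and many deep learning libraries actually compute a closely related operation (cross-correlation) under the same name, so treat this as the textbook idea rather than any library's implementation.

```python
def convolve_1d(signal, kernel):
    """Full discrete convolution of two sequences."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            # Every pair (signal[i], kernel[j]) contributes to position i + j.
            out[i + j] += s * k
    return out


# Illustrative values: a rising signal and a small difference-style kernel.
signal = [1.0, 2.0, 3.0, 4.0]
kernel = [1.0, 0.0, -1.0]

result = convolve_1d(signal, kernel)
```

Here `result` is `[1.0, 2.0, 2.0, 2.0, -3.0, -4.0]`. The same small kernel is reused at every position of the input, which is why a convolutional layer needs far fewer parameters than a layer that connects every input to every node.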

Recurrent Neural Networks (RNN)

Recurrent neural networks (RNNs) are a type of ANN where connections between the nodes form a directed graph along a temporal sequence, allowing them to use their internal memory to process variable-length sequences of inputs. Because of this characteristic, RNNs are exceptional at handling sequence data, such as text or audio recognition.
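The "internal memory" idea can be sketched with a single recurrent node. In this minimal example, the node keeps a hidden state that gets updated at every step of the sequence, so the same function works for sequences of any length. The function name `rnn_forward`, the use of tanh as the activation, and the weight values are all assumptions for illustration, not a trained model.

```python
import math


def rnn_forward(sequence, w_input, w_hidden, bias):
    """Run a one-unit recurrent node over a sequence of numbers."""
    hidden = 0.0  # internal memory starts empty
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
    return hidden


# Hypothetical weights; a trained RNN would learn these values.
w_input, w_hidden, bias = 0.5, 0.8, 0.0

# The same node handles sequences of different lengths.
short_state = rnn_forward([1.0, 0.0], w_input, w_hidden, bias)
long_state = rnn_forward([1.0, 0.0, 0.0, 0.0, 1.0], w_input, w_hidden, bias)
```

Because `hidden` is fed back into the next step, what the node saw earlier in the sequence still influences its final state, which is the property that makes RNNs a natural fit for text and audio.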

Neural Network Applications

Neural networks are powerful algorithms that have led to some revolutionary applications that were not previously possible, including but not limited to the following:

- Image and video recognition: Because of image recognition capabilities, we now have things like facial recognition for security and Bixby Vision.

- Recommender systems: Ever wonder how Netflix is always able to recommend shows and movies that you ACTUALLY like? They’re most likely leveraging neural networks to provide that experience.

- Audio recognition: In case you haven’t noticed, ‘OK Google’ and Siri have gotten tremendously better at understanding our questions and what we say. This success can be attributed to neural networks.

- Autonomous driving: Lastly, our progression towards perfecting autonomous driving is largely due to the advancements in artificial intelligence and neural networks.

Summary

To summarize, here are the main points:

- Neural networks are a type of machine learning model or a subset of machine learning, and machine learning is a subset of artificial intelligence.

- A neural network is a network of equations that takes in an input (or a set of inputs) and returns an output (or a set of outputs).

- Neural networks are composed of various components like an input layer, hidden layers, an output layer, and nodes.

- Each node is composed of a linear function and an activation function, which together determine which nodes in the following layer get activated.

- There are various types of neural networks, like ANNs, CNNs, and RNNs.
